
An SEO audit is an imperative process to undertake regularly, as it covers everything associated with your search rankings. To remain competitive in your market, you need to harness the potential of SEO. By conducting such reviews, you can see where you stand and what you need to improve to compete successfully with (and even oust) your rivals. An SEO audit for a website needn’t be a lengthy procedure, and it can be completed conveniently to satisfy both Google’s algorithms and your potential customers’ needs. It will also verify your backlink profile and existing content to ensure optimal search performance and visibility. Consequently, your sales and profits will be preserved, if not grown. So let’s take a look at what needs to be done, how, how often, why, and where.


What is an SEO audit?

Content needs to be pertinent to your site, and contemporary methods have done away with former protocols that are now obsolete. Mobile-optimized, ergonomic sites are becoming the norm, and SERP ranking depends in part on accessibility. Don’t forget that search engine crawlers scrutinise your site for this very purpose, to ascertain how it should be ranked according to qualitative factors. These include page load speed, security, and user ergonomics.

Be mindful of continually developing algorithms and their updates, which need to be satisfied to stay on top of your game. Any existing material needs to be reviewed and, if necessary, updated. Links may need to be renewed or fixed. This helps you to keep pace with competitors, evolving trends, and expectations. It’s advisable to conduct a quick audit monthly and a comprehensive one quarterly. SEO scoring, efficiency, required modifications, addressing vulnerabilities, and futureproofing your site are all imperative considerations.

Why is an SEO audit important?

Every website needs to follow the quality guidelines published by search engines like Google. These guidelines, and the algorithms that enforce them, are constantly changing because Google always aims to provide quality pages in its search results to delight its users. So if we want our website pages to rank in SERPs, we need to think about both Google’s quality guidelines and the needs of our customers.

It’s not easy to understand these things without a thorough SEO audit. A website SEO audit report shows how strong your website is in terms of technical SEO, authoritative content, quality backlinks, brand value, and much more. A strong website gets found in Google and drives enough organic traffic to your site, from which leads can subsequently be converted into sales. These are a few reasons why a website SEO audit is important. Here are some more:

- Search algorithms: search engines update their algorithms to produce optimal results, so tweak your site accordingly to improve your searchability.

- Webmaster tool guidelines: these are also continually revised. Checking against them will surface any errors or broken links, and you can then set up redirects to preserve traffic.

- Titles and meta data: title tags and meta descriptions are the first features that visitors see in the search results. These must be related to your site content.

- Outdated content: any such material needs to be revised or updated so that search engines can crawl it accordingly. This helps to uphold your ranking and encourages visitors to return to your site. Reviewing content roughly every six months is recommended.

Factors that affect your SEO audit

The main factors which can impact your site’s SEO audit fall into three categories:

1. Technical factors

Aspects such as hosting, accessibility, indexing, and page loading speed need to be analyzed to establish a robust foundation to build upon. Your robots.txt file and meta tags need to be manually verified, as webmasters may have accidentally restricted or blocked access to specific sections. XML sitemaps help to guide crawlers, but they should be properly formatted and ready for submission to your webmaster tools account. Good site architecture allows easy navigation from the landing page to the destination page. Fewer clicks from origin to destination mean easier crawling and access. Be careful with redirects, as they can mislead or throw off crawlers.

Optimize your website speed and mobile interface with full functionality to retain visitors. If you receive a penalty, address the issue and request a reconsideration from Google, bearing in mind that poor indexing or slow accessibility could be the reason.

By implementing all of the technical SEO factors, you can see a significant improvement in your website’s ranking.

2. On-page factors

This includes site structure, content, target keywords, and website performance in terms of design, speed, redirection, code issues, etc. All subjects discussed should be coherently aligned to ensure SEO harmony. Avoid indulging in monetizing product promotion, as this can be distracting. Bloggers tend to experience keyword cannibalisation and duplicate content, as they focus solely on popular high-ranking topics. This can confuse and overwhelm the indexing process, leading to false overestimates. Multiple pages targeting identical keywords can lead to several redundant and unreachable results.

Prose should be well structured, and URLs need to be crisp, contain your main keyword, and be hyphenated if required. The same applies to your title. Aim for around 500 words of content that adds value, is distinctive, includes LSI keywords, is grammatically accurate, and flows well. Image optimization for searches and decent meta descriptions can boost conversion rates. External links to credible sources can instill more confidence in your audience.

On-page SEO is all about optimizing your website’s content and structure to make it more visible and relevant to search engines. By improving your on-page SEO, you can increase your chances of ranking higher in search results and getting more traffic to your site. There are many on-page SEO tools available to help improve your website’s ranking in search engine results pages (SERPs).

3. Off-page factors

This entails backlinks and external references from other sites to yours. Domain versatility to improve visibility is another important factor. These are linked to your site’s popularity, impressions, linking sources, and credibility, and to avoiding SEO blacklisting so as to uphold your reputation. Increased traffic, social integration, longer browsing duration, lower abandonment rates, and better reactions are what to aim for. Hence, engagement needs to be aligned with your linking strategy to avoid being unnecessarily flagged by Google.

Audits for all of these factors need to be conducted at regular intervals. This ensures that you’re always up to date with evolving industry trends. A pivotal factor to remember is the mobile readiness of your site. Increasing usage of mobile devices means that greater weight is given to this.

What steps should you follow to conduct an SEO audit?

Here are the steps that you should follow to perform a website audit:

#1. Determine how SEO fits into your overall marketing strategy

As SEO trends continue to evolve, it’s essential to fully understand how your SEO methodology works in tandem with marketing. Many companies invest heavily in Google search, and social media integrations now carry more financial weight too. Hence your spending is somewhat correlated with SEO success. You need to determine what’s required to promote your brand by asking yourself at what stage you intend to reach your customers. Is it prior to purchase, with sales tactics to entice them, or at the point of sale (PoS)? Without this clarity, you’ll have difficulty gauging your performance. Targeting customers during their decision-making process makes things much simpler. Software integrations on landing pages reinforce this concept with SERPs, as this linking eases ranking, particularly for organic searches.

#2. Do some quick Panda & Penguin checks

What are these, I hear you ask? They are algorithm updates designed by Google to serve higher-quality sites in SERPs. While Panda scrutinises content and banners, Penguin evaluates whether your links are natural and logical. To gauge Panda, simply review your site for banners (there shouldn’t be a sizable quantity of them). Furthermore, check whether your latest offer occupies most of the screen in place of content, as you need to strike a balance; excessive banners above the point where visitors need to scroll are merely redundant. Regarding Penguin, try Majestic’s SEO tool to verify your backlinks quickly and discover whether any spurious sites are linking back to your brand. If so, disavow those sites in your Google Search Console. Finally, review your page titles and strengthen them with punchier taglines.

#3. Check that only ONE version of your site is browseable

Ensure that there’s just one version of your brand’s site available online at any given time (live and accessible) by considering every possible way anyone could enter your site URL into a browser (or link to it), e.g.

http://yourdomain.com

http://www.yourdomain.com

https://yourdomain.com

https://www.yourdomain.com

Of these, only one should exist (and work), whilst the others should be 301 redirected automatically to the canonical version (note this down). Test this with other URLs on your site as well, to ensure that the redirects are applied across the board. Also try to use HTTPS, as this instills further trust in the user, and SSL certification boosts both rankings and security. Free certificates are available from Let’s Encrypt.
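As a minimal sketch of this check, the snippet below lists the four variants of a domain and flags any that do not 301 to the canonical version. It deliberately makes no network requests: the `observed` dictionary of status codes and `Location` headers is hypothetical sample data that you would collect yourself (for example from your browser’s developer tools), and the function names are illustrative only.

```python
from __future__ import annotations

from urllib.parse import urlsplit


def url_variants(domain: str) -> list[str]:
    """List the four common ways a domain can be typed into a browser."""
    bare = domain[4:] if domain.startswith("www.") else domain
    return [
        f"{scheme}://{host}"
        for scheme in ("http", "https")
        for host in (bare, f"www.{bare}")
    ]


def find_variant_problems(
    canonical: str, observed: dict[str, tuple[int, str | None]]
) -> list[str]:
    """Report every non-canonical variant that does not 301 to the canonical URL."""
    target = canonical.rstrip("/")
    problems = []
    for url in url_variants(urlsplit(canonical).hostname or ""):
        if url == target:
            continue  # the canonical version itself should simply load
        if url not in observed:
            problems.append(f"{url}: not checked")
            continue
        status, location = observed[url]
        if status != 301:
            problems.append(f"{url}: expected 301, got {status}")
        elif (location or "").rstrip("/") != target:
            problems.append(f"{url}: redirects to {location}")
    return problems


# Hypothetical observations: (HTTP status, Location header) per variant.
observed = {
    "http://yourdomain.com": (301, "https://www.yourdomain.com/"),
    "http://www.yourdomain.com": (302, "https://www.yourdomain.com/"),
    "https://yourdomain.com": (200, None),
}
problems = find_variant_problems("https://www.yourdomain.com", observed)
print("\n".join(problems))
```

In this sample, the second variant uses a temporary 302 instead of a 301 and the third loads as a separate live version, so both are reported.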

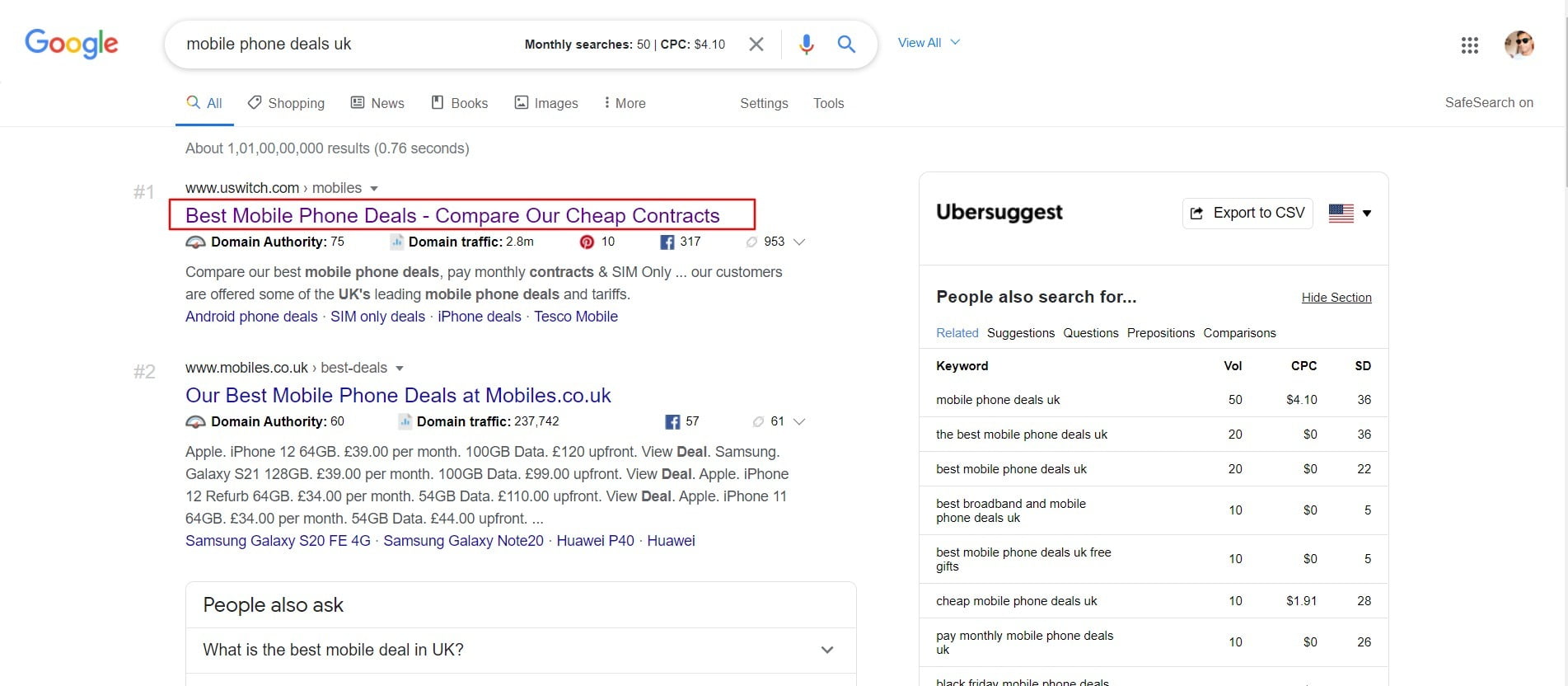

#4. Competitive analysis and keyword research

These two tasks are closely related: keywords are the smallest unit in SEO, yet when analyzed as a whole they let you compare yourself against your competitors. This enables you to discover which keywords are untouchable, along with their traffic and difficulty. Traffic denotes the volume of searches performed (typically per month), whilst difficulty is how hard it is to rank your site for that keyword. Ideally, both factors should be moderate, although you may need to target higher-traffic keywords to oust your rivals. Auditors need to be mindful of this before advising on which keywords to deploy. Free SEO analysis tools such as Google Keyword Planner are good choices, although paid services like Ahrefs are great too.

#5. Start by crawling your website

This should always be the first step for any SEO audit, and tools such as SEMrush, Spyfu or DeepCrawl can help you do this. These crawlers highlight errors such as broken links, page title issues, poor images, and keyword problems. Furthermore, they discover duplicate content, excess redirects, and unlinked pages. The Google Search Console can show your crawl budget, which is the number of pages that the Google bot crawls on your site and how often. In order to maximise this budget, be sure to:

- Eradicate duplicate content: such pages unnecessarily occupy your crawl budget. Tools like Screaming Frog can find these, as they tend to have identical title and meta description tags. If a duplicate needs to be retained, block it from Google’s bots so that it isn’t crawled

- Moderate indexation: pages such as Privacy Policy and Terms and Conditions don’t need to be listed in search results. These can be excluded from indexing to save on your crawl budget

- Provide URL parameters: Google may crawl the same page both with and without URL parameters, treating them as two separate pages. You can instruct Google to treat them as the same page to avoid such issues

- Fix redirects: each redirect that the Google bot follows wastes your budget, and if there are multiple 301 or 302 redirects in a row, the bot may stop following them before it reaches the destination page

Decrease your redirect volume to optimize your crawl budget.
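To illustrate the redirect point, here is a small sketch that finds redirect chains in a crawl export so that each one can be flattened into a single hop. The `redirects` mapping (source path to target path) is hypothetical sample data, and the function names are illustrative rather than part of any crawler’s API.

```python
from __future__ import annotations


def redirect_chain(start: str, redirects: dict[str, str], max_hops: int = 10) -> list[str]:
    """Follow `start` through the redirect map and return every URL visited."""
    chain = [start]
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if chain.count(target) > 1:
            break  # redirect loop: stop instead of spinning forever
    return chain


def chains_to_flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Map each URL that needs more than one hop to its final destination."""
    result = {}
    for start in redirects:
        chain = redirect_chain(start, redirects)
        if len(chain) > 2:  # start + at least two hops
            result[start] = chain[-1]
    return result


# Hypothetical crawl export: "/old" takes two hops to reach "/new".
redirects = {"/old": "/older-new", "/older-new": "/new", "/promo": "/new"}
print(chains_to_flatten(redirects))
```

Pointing `/old` straight at `/new` would remove one hop for every crawl of that URL.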

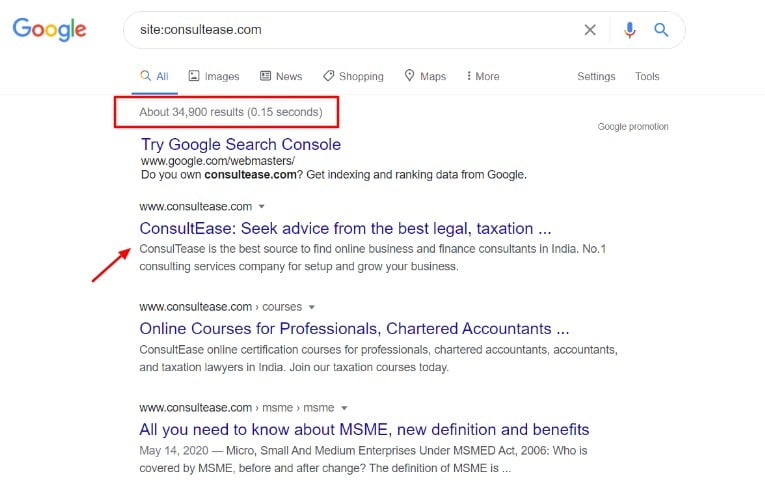

You can also manually search for your site on Google by entering “site:” followed by your domain name and evaluating the results. You’ll then be able to see just how many of your site’s pages are returned. Any pages which are omitted may indicate what’s wrong and how to increase your crawlability. You can also check your SEO score with sites such as SEO Site Checkup. The score is expressed out of 100, and the report can highlight your most popular keywords and find broken links as well as coding errors. Moreover, you can verify whether a sitemap and robots.txt exist for your site. Any malware or evidence of phishing is also considered and can affect your score.

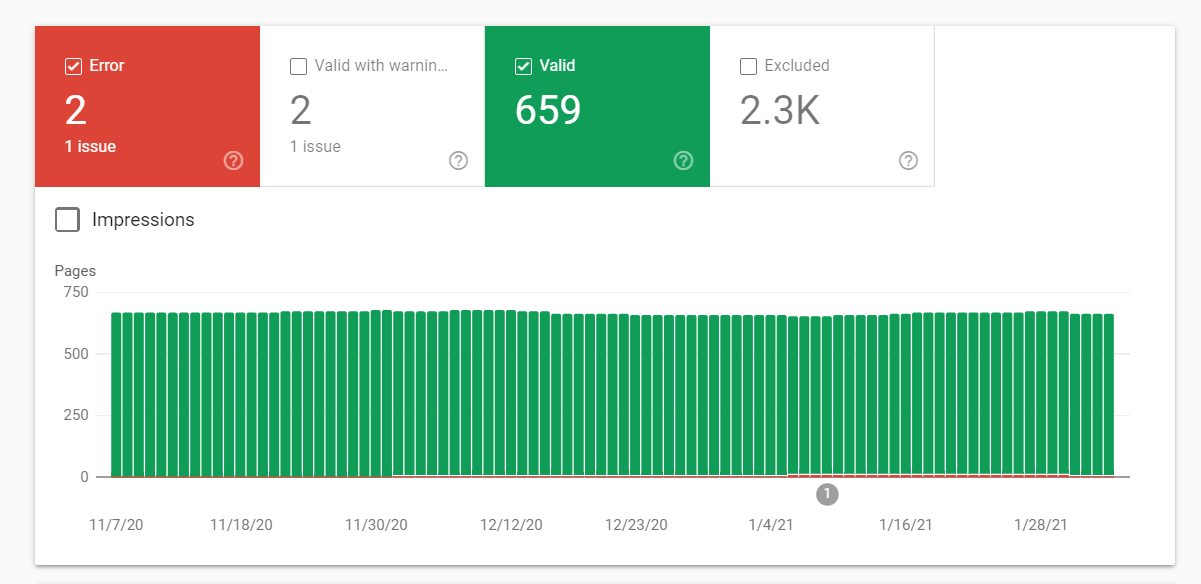

#6. Check Google for indexation issues

If your site isn’t indexed, then you won’t be ranked and will lose any potential organic traffic! The culprit is often a rogue X-Robots-Tag HTTP header.

To fix this, you will need access to your site’s header.php, .htaccess, or server access file. To check your indexing status, simply visit:

Google Search Console > Index > Coverage

If you’re not using this, then simply perform a Google search with the ‘site:’ search operator. It’ll return the number of indexed pages (although not as reliably as the Search Console itself). You should compare this with the number of internal pages discovered during the site crawl, and you may need to allow the crawl to complete before proceeding. Simply navigate to Site Audit > Project > Internal pages. Errors will be revealed by the ‘noindex’ tag.

Furthermore, run an HTTP status check to reveal any broken links or redirects and identify any discrepancies in page counts. Any excess pages that turn out to be broken should be removed from indexing altogether. Duplicate content can easily be found with tools such as Screaming Frog. Meta tags that are overly short or lengthy, and broken images, can prove problematic as well. CMSs such as WordPress publish category pages by default. Although these are separate pages, they may still appear as duplicates in SERPs. A plugin such as SEO by Yoast can rid you of these issues by hiding such category pages from the search results.
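The two most common ways a page ends up excluded from the index, an `X-Robots-Tag` response header or a robots meta tag containing `noindex`, can be checked with a short script. This is a sketch under stated assumptions: you supply the response headers and HTML yourself, and the class and function names are illustrative.

```python
from __future__ import annotations

from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() in ("robots", "googlebot"):
            self.directives.append((attr.get("content") or "").lower())


def is_noindexed(headers: dict[str, str], html: str) -> bool:
    """Return True if the header or a robots meta tag asks for noindex."""
    header_value = ""
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":  # header names are case-insensitive
            header_value = value.lower()
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in header_value or any("noindex" in d for d in parser.directives)


print(is_noindexed({"X-Robots-Tag": "noindex, nofollow"}, "<html></html>"))
```

Running this over the pages from your crawl gives a list to compare against the ‘noindex’ errors reported by your audit tool.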

#7. Check that you rank for your brand name

Visit Google and enter your brand name. Your homepage should appear as the first organic search result (the exception being if your site has only recently launched or the name is a very generic term). If you rank lower than this, then it’s likely that Google gives preference to another site which it deems more suitable. This is why you should be wary of using generic words or phrases as your brand name, as visitors may not see your site during a search. To combat this, you can build up your brand and links, for example by running PR campaigns that earn mentions and references from reputable sites. Business directory citations, Google Business listings, and good social media coverage all help too.

Relevance to search intent always promotes stellar results, and by doing all of the above your ranking will increase, albeit gradually. Consequently, competing results will decline. But what if you don’t appear in SERPs at all? Once you have ruled out indexing issues, check for algorithmic or manual penalties. These can be checked via Google Search Console, which should confirm ‘no manual webspam actions found’.

#8. Evaluation of content

This entails evaluating how effectively your site’s content promotes your business to organic visitors. Analysing both user behaviour and search engine data helps you to optimize content around your target keywords. Also check for duplication issues in meta descriptions, page titles, and target keywords. These findings can be used to formulate an effective SEO content strategy, which in turn supports a robust and sustainable roadmap for your content team.

#9. Consider Architecture and Design of a Website

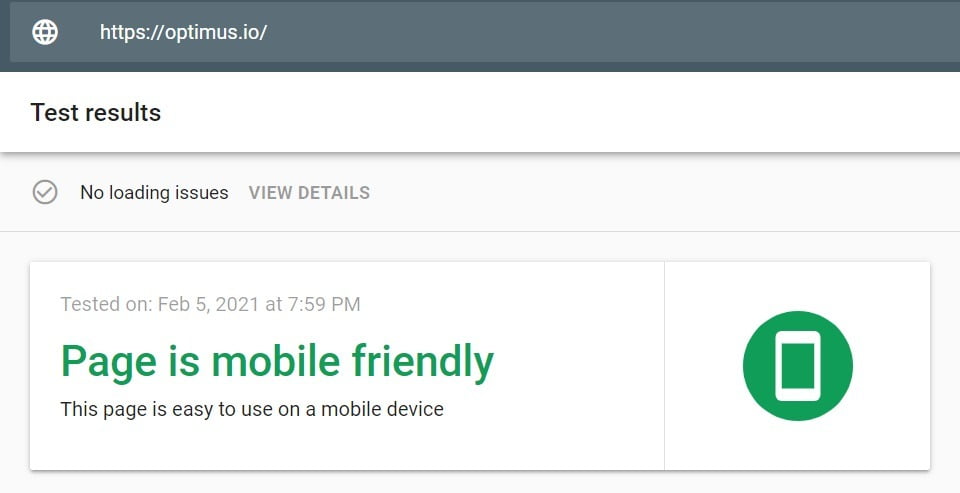

Google envisions a safe and pleasant experience for its users, hence review your visitor journey. Whether it’s the mobile ergonomics, accessibility or security with HTTPS, these all need consideration. All these factors should be positively primed to satisfy Google’s algorithms. Use the mobile friendly test tool to check this.

#10. Low-quality content

Ever heard the principle of valuing quality over quantity? Removing excess pages from search results (AKA ‘content pruning’) can actually do wonders for your traffic. Simply purge redundant content and perform manual searches to find poorly performing pages to exclude. Many CMSs index multiple page types by default, and often a simple setting can be adjusted to address the issue instead of deleting the pages. A neat and coherent site is music to Google’s ears!

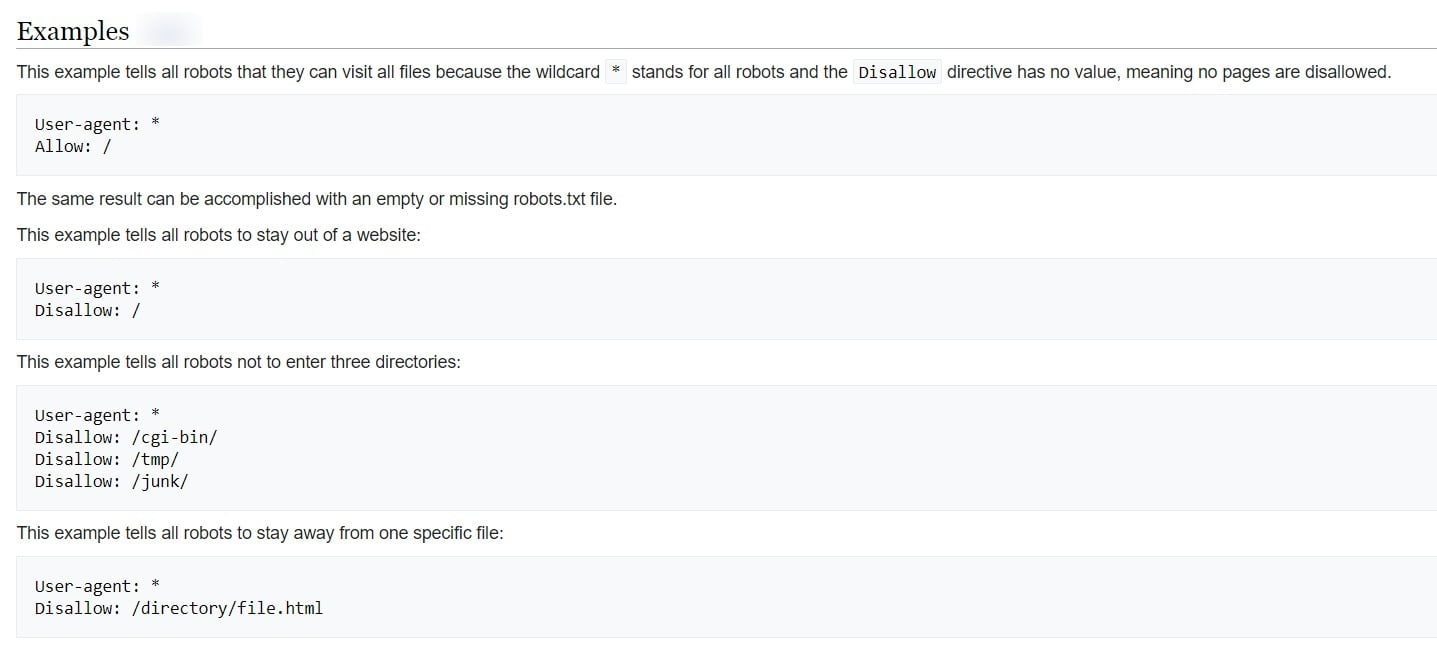

#11. Using Robots.txt and Robots meta tags to resolve technical issues

Understanding how the robots.txt file functions is essential in any SEO audit, as it tells Google and other web crawlers which parts of your site they may crawl, enabling bots to discover your pages. You can also tell Google which parts you don’t want crawled (such pages may still be indexed if something links to them). Glitches can cause Google to deindex several pieces of content rapidly. Services such as Technical SEO’s text checker automatically monitor your robots.txt for modifications and notify you accordingly to avoid such mishaps, as these can have an irreversible effect on traffic.
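Before deploying a robots.txt change, you can test it locally against the URLs you care about. The sketch below uses Python’s standard `urllib.robotparser`; the rules and URLs are hypothetical examples, and the standard-library parser may not interpret every rule exactly as Google’s own parser does, so treat it as a first check only.

```python
from __future__ import annotations

from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /search

Sitemap: https://www.yourdomain.com/sitemap.xml
"""


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given crawler is not allowed to fetch."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


urls = [
    "https://www.yourdomain.com/blog/seo-audit",
    "https://www.yourdomain.com/checkout/cart",
    "https://www.yourdomain.com/search?q=shoes",
]
print(blocked_urls(ROBOTS_TXT, urls))
```

If an important page shows up in the blocked list, fix the rule before it goes live.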

For de-indexing particular pages, Google advises using robots meta tags

These offer a granular, page-specific way to control how an individual page is indexed and presented to users in search results. Position the robots meta tag in the `<head>` section of a page, like so: `<meta name="robots" content="noindex">`

This robots meta tag instructs most search engines not to show the page in search results. The value of the name attribute (robots) specifies that the instruction applies to all crawlers. To address a particular crawler, replace the robots value of the name attribute with the name of that crawler. Crawler names are also known as user-agents (a crawler uses its user-agent to request a page). Google’s default web crawler has the user-agent name Googlebot, so to prevent only Googlebot from indexing your page, update the tag as follows: `<meta name="googlebot" content="noindex">`

This tag instructs Google (but no other search engine) not to show the page in its web search results. Both the name and content attributes are case-insensitive.

Although Google doesn’t officially support a noindex directive in the robots.txt file, the command does work in other cases, most notably Wayfair. Just remember that your mileage may vary when applying it this way. Make sure that the page isn’t blocked in robots.txt, so that Google can crawl it and see its robots meta tags.

#12. Perform an image optimization audit

In an increasingly visual online world, it’s important to hone your imagery, graphics, and videos to maximise their impact on audiences. Furthermore, with the sharp rise in mobile device usage, it’s no wonder that page load times need to be curbed to retain visitors. This contributes to both SEO and user experience. By creating an ergonomic, feature-packed, and pleasant journey, you make people more likely to spend longer on your site. As images can be both data intensive and costly, it’s imperative that you invest wisely. Moreover, such images should have a synergistic effect on your brand and not be a detriment to the site!

What’s more, image optimisation works in tandem with (targeted) keyword research, meaning that good images can help your site to be found more easily. Firstly, use a service such as Screaming Frog to create an image inventory. Simply set it to ‘spider’ mode and leave the other settings as they are. Next, input your URL and begin the crawling process. Open the images section to view the report, which covers both internal and external assets referenced in your HTML, including images hosted on a CDN (Content Delivery Network). Avoid relying solely or heavily upon externally sourced images, as they can be unreliable and subject to change; you need autonomy over the servers which store and serve such media. An alternative tool is URIValet, which also covers CSS-based background logos, banner images, and icons.

Next we need to focus on formatting and size to ensure that page load speeds are upheld, rather than hindered. Running diagnostic tests to evaluate the need and benefit of retaining or purging content can be a lifesaver. For instance, redundant images can be substituted with lightweight CSS webfonts. Regarding the format, there are two to choose from: vector and raster. Which one to opt for depends on the image features, so the former serves simple geometric shapes (saved as SVG), whilst the latter is for those with greater detail in terms of colour and design (JPEG, PNG or GIF). You can always review your Screaming Frog report to decide on the suitable format (by accessing the content section).

Compressing and resizing images is the optimal way to boost site load times (apart from caching, minifying CSS, and blocking external requests). Both the total number of pixels and the amount of data stored per pixel can be reduced, which lowers file size and latency. In Screaming Frog’s preferences you can set the image size limit to around 75 to 100 KB, which strikes a balance between quality and speed. Then crawl and review which images need compression. Always try to maintain the original aspect ratio when resizing, and crop where necessary.

Another aspect is filenames: the name just before the file extension should be pertinent and correspond with your keyword research. Refrain from using superfluous words and special characters such as underscores (use hyphens and normal letters instead). Shorter URLs are more easily discoverable.
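The filename advice is easy to automate when you have many images to rename. This is a minimal sketch: the `seo_filename` helper is hypothetical, and it assumes plain ASCII names, so any other character (including accented letters) is replaced by a hyphen.

```python
import re
from pathlib import PurePosixPath


def seo_filename(filename: str) -> str:
    """Lowercase a filename and replace underscores, spaces and symbols with hyphens."""
    path = PurePosixPath(filename)
    stem = re.sub(r"[^a-z0-9]+", "-", path.stem.lower()).strip("-")
    return f"{stem}{path.suffix.lower()}"


print(seo_filename("Blue_Running Shoes.PNG"))
```

The helper only cleans up the characters; choosing a short, descriptive name that matches your keyword research is still up to you.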

Alt text helps search engines to interpret your site’s image content; check the image info tab in Screaming Frog to find where it has been omitted. Again, don’t overwhelm it with keywords, and be sure to apply alt text to every image. Keep it concise, at a hundred characters or fewer. The text should be kept minimal, and deploying CSS to improve SEO may be worth considering.
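If you would rather check alt text on a single page without a crawler, a few lines of standard-library Python will do. This sketch flags images with no alt text and alt text longer than the hundred characters suggested above; the class and function names are illustrative, and it treats an empty `alt=""` as missing even though that can be legitimate for purely decorative images.

```python
from __future__ import annotations

from html.parser import HTMLParser

MAX_ALT_LENGTH = 100  # the character limit suggested in this article


class ImageAltAuditor(HTMLParser):
    """Record every <img> whose alt text is missing or too long."""

    def __init__(self) -> None:
        super().__init__()
        self.issues: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr = dict(attrs)
        src = attr.get("src") or "(no src)"
        alt = (attr.get("alt") or "").strip()
        if not alt:
            self.issues.append((src, "missing alt text"))
        elif len(alt) > MAX_ALT_LENGTH:
            self.issues.append((src, f"alt text is {len(alt)} characters"))


def audit_alt_text(html: str) -> list[tuple[str, str]]:
    auditor = ImageAltAuditor()
    auditor.feed(html)
    return auditor.issues


sample = '<img src="a.jpg" alt="Blue running shoes"><img src="b.jpg">'
print(audit_alt_text(sample))
```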

Manually scan your site to judge the quality and worthiness of each image. Are they specific to your brand, professionally produced, and actually purposeful? Update and convert them using graphics software, such as Adobe’s Creative Suite and Photoshop. For converting large volumes, automated compression tools such as PunyPNG and JPEGmini (both paid) and Compress JPEG/PNG (free) are great contenders. Prioritize performance over image detail, although both are important factors in achieving success.

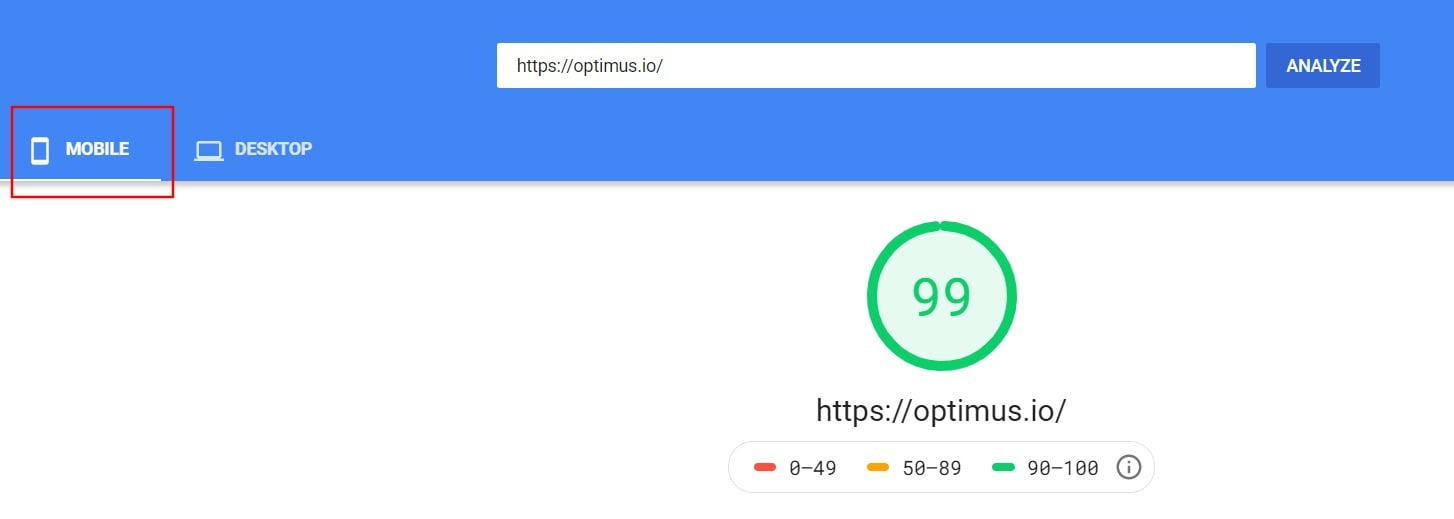

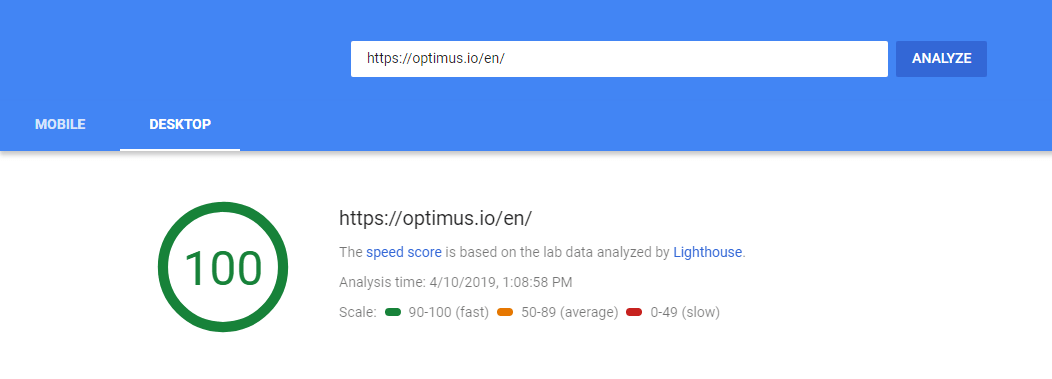

#13. Identify speed & mobile page issues

Page speed is a stated ranking factor for Google, and slow loading and mobile display issues are obvious hindrances to your profitability as well. Pages which load within two seconds have a mean bounce rate of under 10%, whereas pages taking twice as long are abandoned four times as often! Since the introduction of RankBrain, high bounce rates can also hurt rankings by reducing dwell time, another ranking factor. The figures speak for themselves: almost four-fifths of buyers who encounter a poor experience on your site are unlikely ever to return.

Measure your site speed

Tools such as Pingdom’s website speed test can help. Enter the URL, select a location, and run the test. Apart from on-page factors, be mindful that high-quality servers will accommodate more media content without impacting your speed.

Mileage can vary

Resolving problems may be as elementary as installing a caching plugin (for heavy WordPress installations), adding lazy loading scripts, or scaling down image and video files, or as complex and costly as moving to mobile servers.

Gauge mobile-optimisation

Visit Google’s Mobile-Friendly test, enter your URL, and run the test. Provided that you’re not using an archaic WordPress theme, the scores should be fine. If they’re not and you’re unsure how to resolve the issue, contact a developer to assist. Mobile-friendliness affects your rankings and also influences how each person who lands on your site via SERPs interacts with your online business.

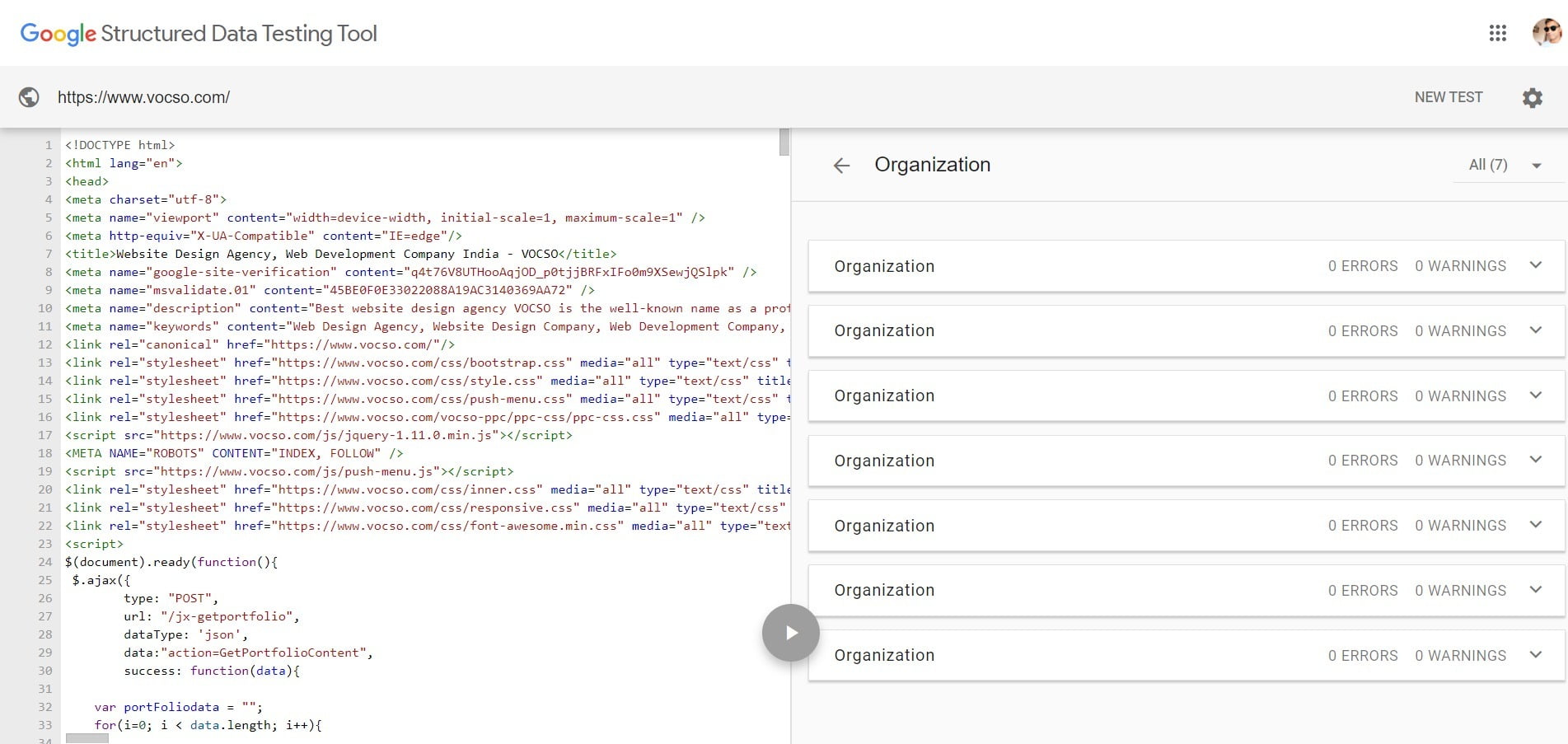

#14. Find structured data errors

Multiple pages on your domain may benefit, SEO-wise, from structured data: product and service reviews, information or description pages, and upcoming events that you’ll be involved in.

Visit Google’s Structured Data Testing tool and input the URL of the site to be checked. Execute “Run Test” and Google will analyse the structured data for the domain entered. Furthermore, it’ll reveal any errors discovered simultaneously.

For any errors highlighted, resolve them as per the location advice given. If you designed the site by yourself, revise the code to implement required edits. If someone else built it, share this report to work upon. This’ll guide them accordingly and will have a profound positive effect.
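For readers who maintain their own markup, here is a rough sketch of what structured data for a product review summary can look like when generated as JSON-LD, plus a very basic pre-check to run before the testing tool. The product name and figures are hypothetical, the helper names are illustrative, and the pre-check only catches malformed JSON and missing `@context` or `@type` keys; it is no substitute for Google’s own validation.

```python
from __future__ import annotations

import json


def product_review_jsonld(name: str, rating: float, review_count: int) -> dict:
    """Build a schema.org Product with an AggregateRating as a JSON-LD object."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
    }


def basic_jsonld_errors(raw: str) -> list[str]:
    """Catch the simplest mistakes: broken JSON or missing top-level keys."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value is not an object"]
    return [f"missing {key}" for key in ("@context", "@type") if key not in data]


markup = json.dumps(product_review_jsonld("Trail Shoe", 4.6, 128), indent=2)
print(markup)
print(basic_jsonld_errors(markup))
```

The generated JSON would normally be embedded in the page inside a `<script type="application/ld+json">` element.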

#15. Improve page titles

Page titles should focus on a particular subject and end with a branded phrase, such as a brief site tagline or your brand’s name. Verify and modify titles with Screaming Frog’s scanner feature, and check for any omissions or duplicates. “Page title branding” can be added wherever possible, and can be as simple as adding your business name to page titles. Also create some high-quality meta descriptions.
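Page title branding lends itself to a small helper. The sketch below appends a brand name to a title unless the brand is already present or the result would become too long. The 60-character limit and the “ | ” separator are assumptions based on common practice, not figures from this article, and “Example Co” is a placeholder brand.

```python
def brand_title(title: str, brand: str, separator: str = " | ", max_length: int = 60) -> str:
    """Append the brand to a page title when it fits and is not already there."""
    title = " ".join(title.split())  # collapse stray whitespace
    if not title:
        return brand  # a missing title falls back to the brand alone
    if brand.lower() in title.lower():
        return title
    branded = f"{title}{separator}{brand}"
    return branded if len(branded) <= max_length else title


print(brand_title("SEO Audit Checklist", "Example Co"))
```

Titles that come back unchanged because they are too long are good candidates for a manual rewrite.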

However, coming up with a good title can be difficult. That’s where our free blog title generator comes in! Just enter a few keywords related to your topic, and our generator will come up with a list of potential titles for you to choose from. Best of all, it’s completely free to use! So why not give it a try?

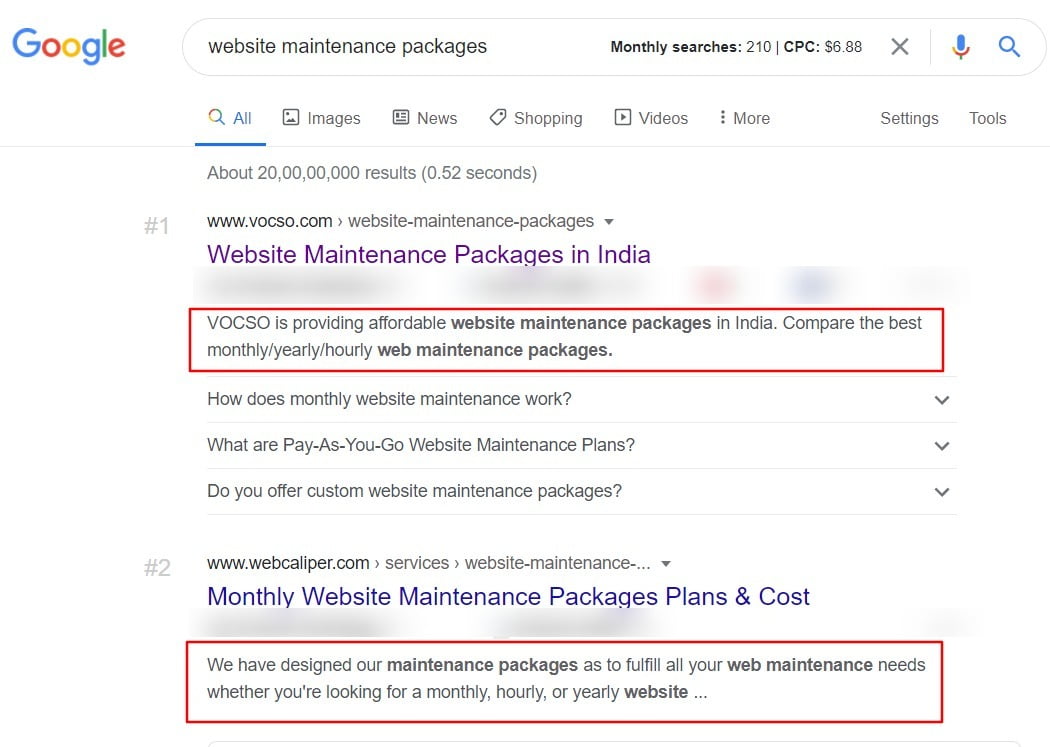

#16. Test your meta descriptions

Meta descriptions summarise what your site is presently displaying in search results, and they need attention. Combined with good titles, this simple principle makes success far more attainable. Pay heed to duplicate tags on similar pages across various sections of your domain, as these can be problematic. Access the Google Search Console and select the option “Search Appearance”, then the button labeled “HTML Improvements.” Click the option mentioning “Duplicate Meta Descriptions” to tally the meta tags which need revision.

Be mindful that a meta description is supposed to clarify the content available, hence promoting click through rates (CTRs). The descriptions should be concise and distinctive. Additionally, the “HTML Improvements” window in the Google Search Console can show omitted or duplicate tags. Ensure that every page has a title tag, and set clear titles which demonstrate what’s on offer and why to access it.

ABT (Always Be Testing) applies to your titles and meta descriptions too. Test these and monitor your CTRs.
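If you have a crawl export of URLs and their meta descriptions, duplicates and omissions can also be tallied with a short script. This is a sketch with hypothetical sample data; the function names are illustrative, and descriptions are compared after lowercasing and collapsing whitespace.

```python
from __future__ import annotations

from collections import defaultdict


def duplicate_descriptions(pages: dict[str, str]) -> dict[str, list[str]]:
    """Group URLs that share the same meta description."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, description in pages.items():
        key = " ".join((description or "").lower().split())
        groups[key].append(url)
    return {desc: urls for desc, urls in groups.items() if desc and len(urls) > 1}


def missing_descriptions(pages: dict[str, str]) -> list[str]:
    """List URLs whose meta description is empty."""
    return [url for url, description in pages.items() if not (description or "").strip()]


# Hypothetical crawl export: URL path -> meta description.
pages = {
    "/": "Handmade leather bags.",
    "/shop": "handmade  leather bags.",
    "/about": "Our story.",
    "/contact": "",
}
print(duplicate_descriptions(pages))
print(missing_descriptions(pages))
```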

#17. Heading tags analysis

Headings (h1, h2, h3, h4, h5, and h6) are page headlines. It’s imperative to include pertinent keywords in them, but design them for conversion too, as they’re noticeable to visitors. Ask yourself: does your site have the headers it needs, without being excessively optimised?
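A quick way to review a page’s headings is to pull out its outline. The sketch below lists headings and flags two common conventions: exactly one h1 per page, and no skipped levels (such as an h3 directly after an h1). Both rules are widely used rules of thumb assumed here for illustration, not requirements stated in this article.

```python
from __future__ import annotations

from html.parser import HTMLParser


class HeadingOutline(HTMLParser):
    """Collect (level, text) for every h1-h6 on the page."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self) -> None:
        super().__init__()
        self.outline: list[tuple[int, str]] = []
        self._level: int | None = None
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADINGS:
            self._level, self._text = int(tag[1]), []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in self.HEADINGS and self._level is not None:
            self.outline.append((self._level, " ".join("".join(self._text).split())))
            self._level = None


def heading_issues(html: str) -> list[str]:
    parser = HeadingOutline()
    parser.feed(html)
    parser.close()
    issues = []
    h1_count = sum(1 for level, _ in parser.outline if level == 1)
    if h1_count != 1:
        issues.append(f"expected one h1, found {h1_count}")
    previous = 0
    for level, text in parser.outline:
        if level > previous + 1:
            issues.append(f"h{level} '{text}' skips a level")
        previous = level
    return issues


print(heading_issues("<h1>SEO audit</h1><h3>Crawling</h3><h2>Indexing</h2>"))
```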

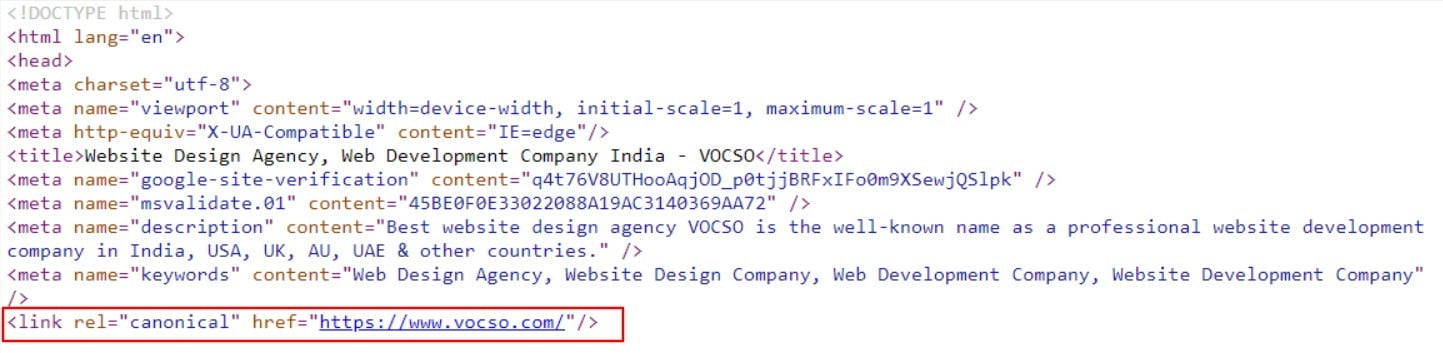

#18. Don’t forget canonical URLs

The canonical URL is the link that tells Google (and you) which URL is the original source of a given page. You can view these in your crawl report under the “Canonical Link Element 1” tab. Verify whether any pages lack canonical URLs and ensure that the canonical URLs correspond with the regular page links.
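The same check can be scripted for a single page. This sketch extracts `<link rel="canonical">` from the HTML and compares it with the page’s own URL; the names are illustrative, and the comparison only ignores a trailing slash, so treat “points elsewhere” as a prompt to look closer rather than an error in itself.

```python
from __future__ import annotations

from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Collect the href of every <link rel="canonical"> element."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "link" and "canonical" in (attr.get("rel") or "").lower().split():
            self.canonicals.append(attr.get("href") or "")


def canonical_status(page_url: str, html: str) -> str:
    """Classify a page as 'missing', 'multiple', 'self' or 'points elsewhere'."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return "missing"
    if len(parser.canonicals) > 1:
        return "multiple"
    same = parser.canonicals[0].rstrip("/") == page_url.rstrip("/")
    return "self" if same else "points elsewhere"


page = '<head><link rel="canonical" href="https://www.yourdomain.com/blog/seo-audit/"></head>'
print(canonical_status("https://www.yourdomain.com/blog/seo-audit", page))
```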

#19. Use of images and videos

These media formats can help to establish themes, present a product or service, or even direct visitors to specific sections. Be sure to include enough of them where required, but strike a balance between text and photos. Place essential details above your site’s fold.

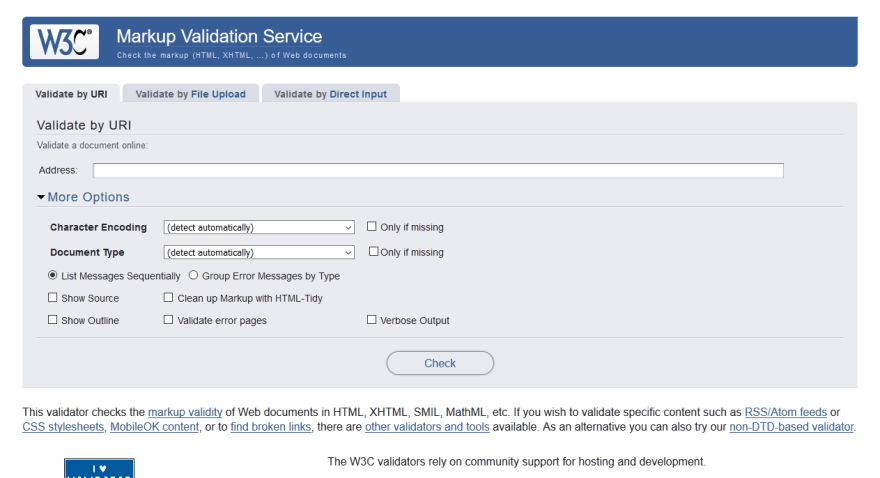

#20. Do a free HTML validation check

This should be an obvious default activity, as the checkups are free and validate all of your site’s HTML code. Visit the World Wide Web Consortium’s validator and input your address to check for errors and receive a results report. Share this with your web developer to edit your pages, resolving “fatal errors” as a priority.

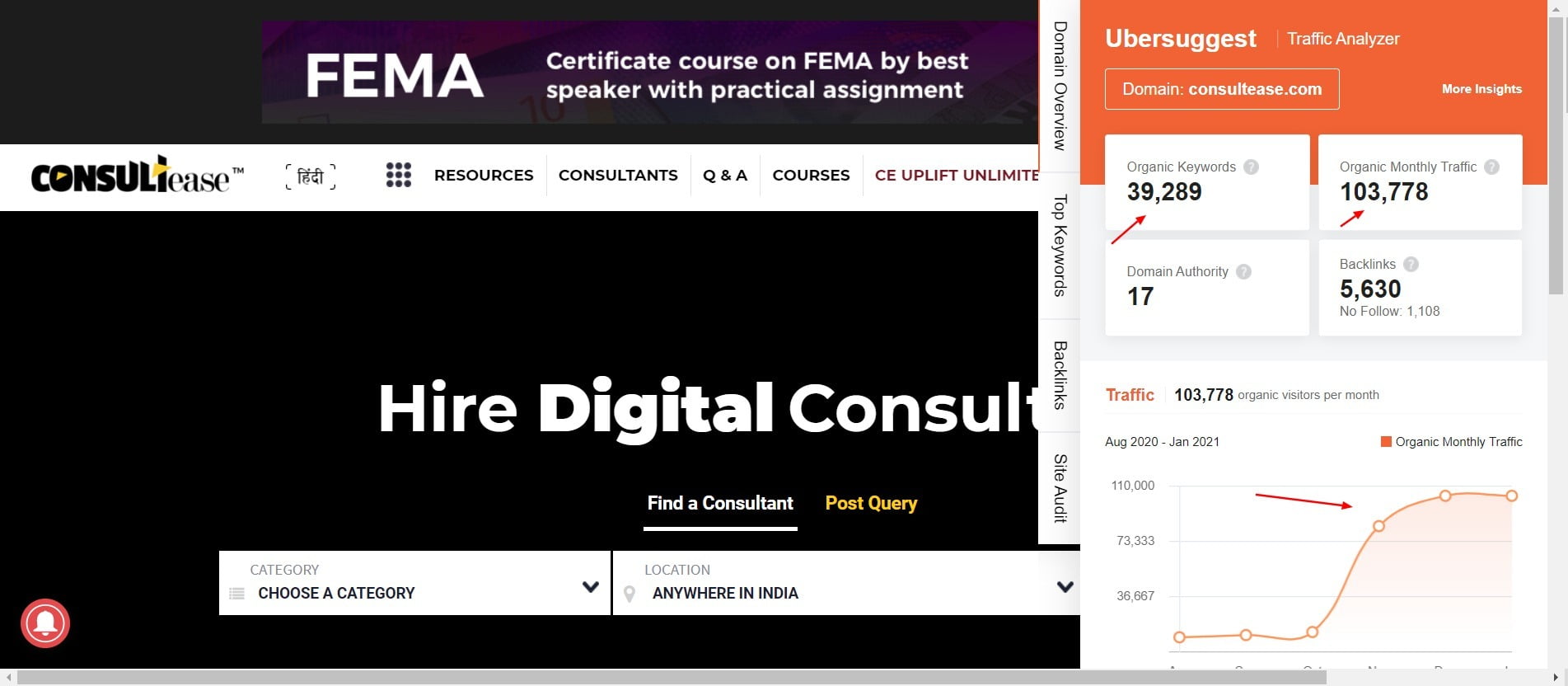

#21. Analyze your organic traffic

The majority of sites obtain their traffic from minor keywords. Long-tail searches constitute over two-thirds of all searches performed online, and half of all searches contain three or more words. This trend suggests that lower-volume, long-tail keywords drive the most traffic. Firstly, analyse the good keywords that already drive traffic to your site; if you’ve linked Google Analytics with Search Console, you can do it from there. Access the acquisition breakdown and find organic search. In this channel, select “Landing Page” as the primary dimension (as the keyword view usually unhelpfully displays “not provided”). This’ll summarise the most important site pages for organic search. You may then check these results against the queries breakdown in Search Console. As Google links queries to landing pages in the Search Console and GA has ceased displaying keyword searches, this indirect method is the most viable path.

Keyword tools now provide increased choice, such as SpyFu, which is recommended owing to its comprehensive variables and filtering. Enter your URL and a valuable SEO keyword analysis appears immediately. However, its traffic figures are approximate, whereas your GA traffic is based on real data. The integrated sorter ranks the most valuable keywords in ascending order. These draw in the greatest click through rates (CTRs) from your ranking for them. Monitoring these high-value keywords, and saving them in the SpyFu project manager, will give you frequent performance updates on your most significant phrases.
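Once you have exported queries and clicks from Search Console, you can measure how much of your own traffic is long-tail. The sketch below treats any query of three or more words as long-tail, following the threshold mentioned above; the query data is hypothetical and the function name is illustrative.

```python
from __future__ import annotations


def long_tail_share(query_clicks: dict[str, int], min_words: int = 3) -> float:
    """Return the fraction of clicks that came from queries with at least `min_words` words."""
    total = sum(query_clicks.values())
    if total == 0:
        return 0.0
    long_tail = sum(
        clicks for query, clicks in query_clicks.items() if len(query.split()) >= min_words
    )
    return long_tail / total


# Hypothetical Search Console export: query -> clicks.
query_clicks = {
    "seo audit": 40,
    "how to do an seo audit": 35,
    "free seo audit checklist": 25,
}
print(f"{long_tail_share(query_clicks):.0%} of clicks are long-tail")
```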

#22. Perform your blog audit

A blog is a simple way to add fresh material to your site, making it more visible and easier to discover online. Google values this, as do visitors who wish to gauge your knowledge of the subject matter by evaluating your content. Criteria include:

- Your blog’s location: it may sit in a directory such as /blog/, on a subdomain, or even on a separate site. It’s advisable to place it in a sub-directory of your own site for maximal autonomy.

- Blog post frequency: are you publishing blog posts regularly, and are they educational and appealing (rather than just sales or marketing orientated)?

#23. Social media integration

Social media is increasingly influential on SEO, so it’s advisable to integrate it with your site, improving ergonomics as well as the shareability of your content. Followers can reach you easily this way.

Pay attention to your site’s branded profiles and share functions (particularly on blog pages).

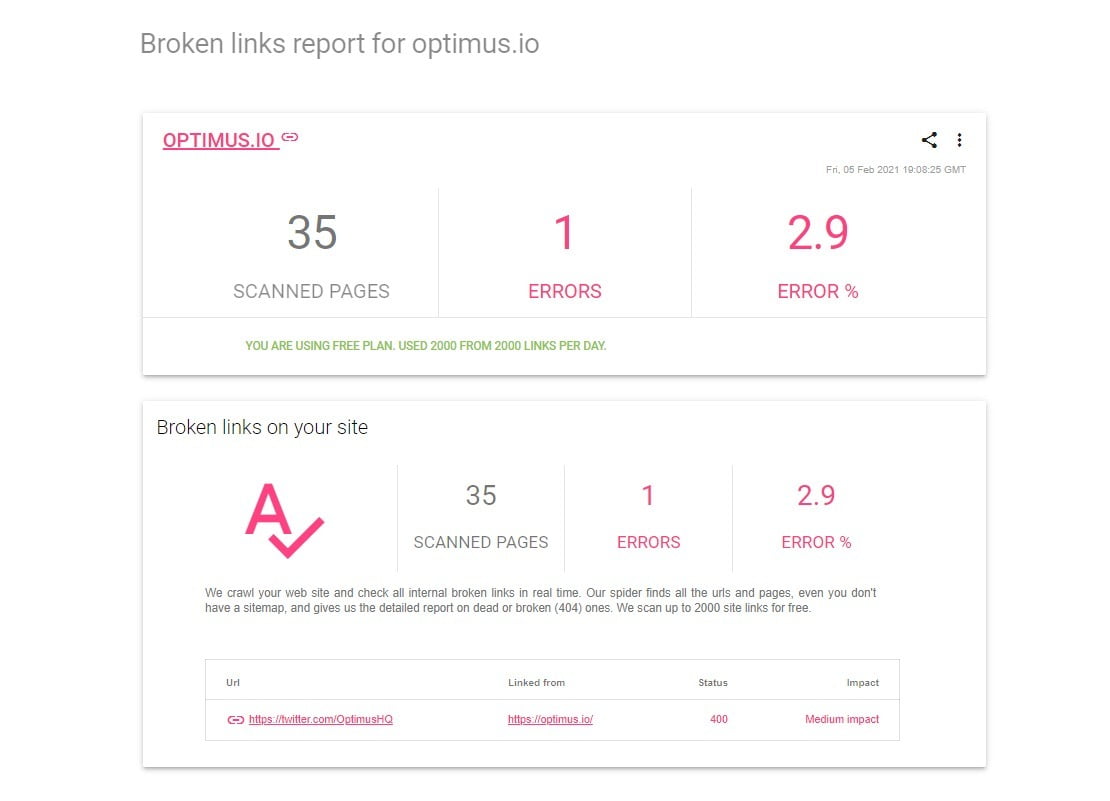

#24. Broken links analysis

Find any broken links and fix or redirect them ASAP to salvage traffic that would otherwise be lost. Don’t forget that this is an SEO ranking factor. Tools such as SEMrush can identify such issues, as can the Broken Link Checker plugin for WordPress environments. The latter operates in the background, meaning that it can hinder speeds somewhat, so beware. Dr. Link Check is available for other ecosystems and collates a list of up to 1000 sites with broken links. It’s then your responsibility to visit and inspect each one of them.

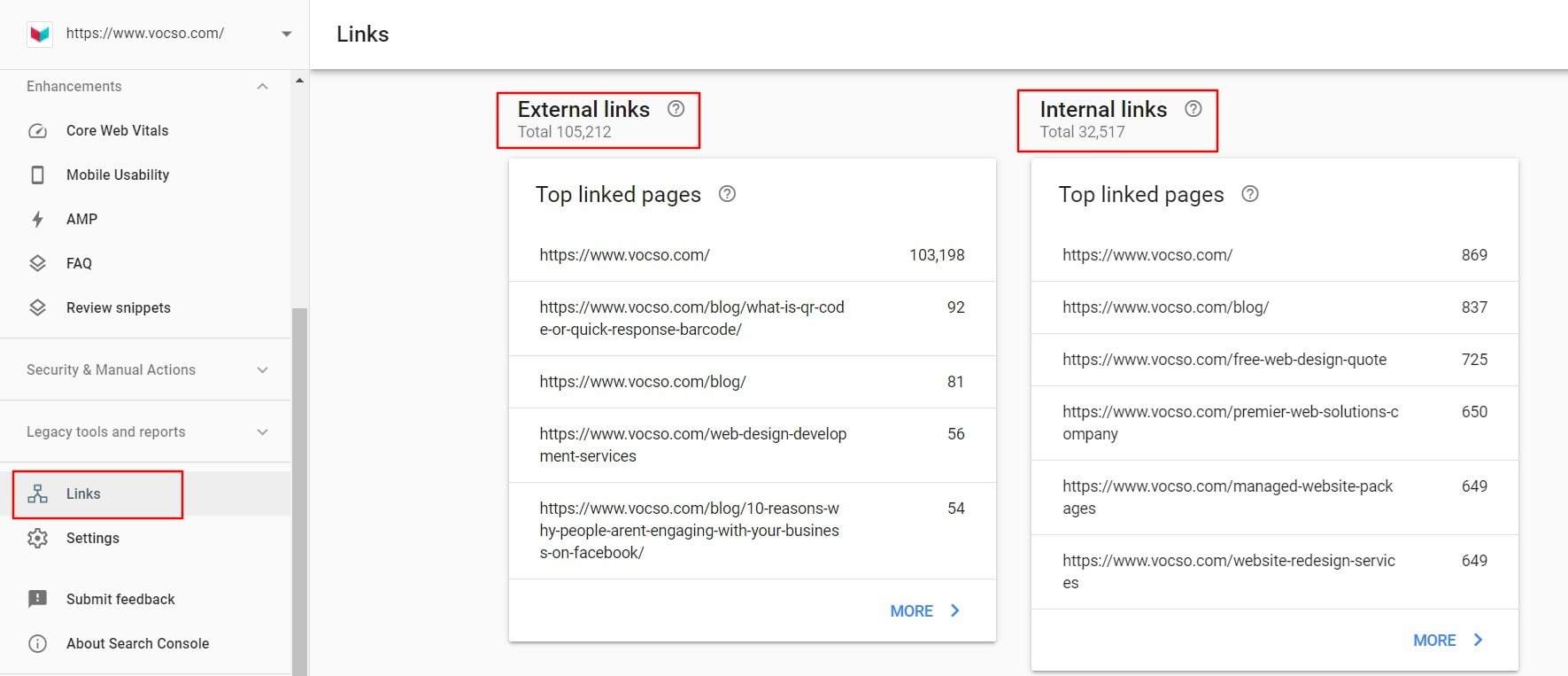
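If you’d like to see what such a checker does under the hood, here is a minimal Python sketch. It is not how SEMrush or any plugin works internally; the names `LinkExtractor`, `find_broken_links` and `get_status` are hypothetical, and the HTTP statuses are stubbed with a dictionary so the example runs without network access.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect the href of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def find_broken_links(page_url, html, get_status):
    """Return (url, status) pairs for links that look broken.

    get_status is a caller-supplied function (an assumption of this sketch)
    that maps a URL to an HTTP status code, or None if the request failed.
    """
    parser = LinkExtractor()
    parser.feed(html)
    broken = []
    for href in parser.links:
        # Skip in-page anchors and non-web links.
        if href.startswith(("#", "mailto:", "tel:", "javascript:")):
            continue
        url = urljoin(page_url, href)  # resolve relative links
        status = get_status(url)
        if status is None or status >= 400:
            broken.append((url, status))
    return broken


# Stubbed statuses for illustration; a real audit would issue HTTP requests.
sample_html = (
    '<a href="/about">About</a> '
    '<a href="/old-page">Old page</a> '
    '<a href="#top">Back to top</a>'
)
statuses = {
    "https://example.com/about": 200,
    "https://example.com/old-page": 404,
}
print(find_broken_links("https://example.com/", sample_html, statuses.get))
# [('https://example.com/old-page', 404)]
```

In practice you would replace the dictionary with a function that requests each URL, and then redirect or repair every link the function reports.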

#25. Optimizing your internal links for rank boosts

It’s not just backlinks that matter but internal links as well, which can easily produce up to another two-fifths (40%) of traffic for your site. Google Search Console reports your internal links, and the analysis highlights which pages your site treats as most significant. The list is sorted in descending order of internal link volume and mostly consists of privacy policies, T&Cs and pages linked from the footer or sidebar (which tend to rank highly in this list). Filter these out to concentrate on pertinent, genuine content. Ensure that the pages you target with internal links are balanced against keyword volumes and cornerstone backlinks.
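The filtering step can be sketched in a few lines of Python. This is only an illustration: the `(source, target)` pairs stand in for data you might export from a crawler, and `BOILERPLATE_HINTS` and `rank_internal_targets` are hypothetical names, not part of any tool mentioned here.

```python
from collections import Counter

# Hypothetical URL fragments that mark site-wide boilerplate pages.
BOILERPLATE_HINTS = ("privacy", "terms", "cookie")


def rank_internal_targets(links, boilerplate_hints=BOILERPLATE_HINTS):
    """Count inbound internal links per page, most-linked first.

    links is an iterable of (source_page, target_page) pairs.
    Boilerplate targets and self-links are left out of the count.
    """
    counts = Counter(
        target
        for source, target in links
        if source != target
        and not any(hint in target for hint in boilerplate_hints)
    )
    return counts.most_common()


links = [
    ("/", "/privacy-policy"),
    ("/blog/a", "/privacy-policy"),
    ("/", "/services"),
    ("/blog/a", "/services"),
    ("/blog/b", "/services"),
    ("/", "/blog/a"),
]
print(rank_internal_targets(links))
# [('/services', 3), ('/blog/a', 1)]
```

Pages with few inbound links but strong keyword potential are the obvious candidates for extra internal links.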

#26. Website backlink analysis

Having appropriate links is a potent ranking factor in the Google search algorithm. To check yours, evaluate how the site is linked internally and how it is linked externally with dependable third-party sites. Tools including Google Search Console can do this for you, showing how pages are related and identifying the significant ones. Other solutions extend this by checking external links and the available opportunities to build them. You can combine both views in the Netpeak Spider tool by following this path: Database > Internal / External links.
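To make the internal/external distinction concrete, here is a small Python sketch that sorts a page’s links into the two groups by comparing hostnames. The function name `split_links` is hypothetical, and treating only an exact host match as internal is a simplifying assumption (subdomains would count as external here).

```python
from urllib.parse import urljoin, urlparse


def split_links(page_url, hrefs):
    """Split hrefs found on page_url into (internal, external) URL lists."""
    site_host = urlparse(page_url).netloc.lower()
    internal, external = [], []
    for href in hrefs:
        url = urljoin(page_url, href)  # resolve relative links
        parsed = urlparse(url)
        if parsed.scheme not in ("http", "https"):
            continue  # ignore mailto:, tel: and similar
        if parsed.netloc.lower() == site_host:
            internal.append(url)
        else:
            external.append(url)
    return internal, external


internal, external = split_links(
    "https://example.com/blog/",
    ["/pricing", "post-1", "https://partner.org/review", "mailto:hi@example.com"],
)
print(internal)  # ['https://example.com/pricing', 'https://example.com/blog/post-1']
print(external)  # ['https://partner.org/review']
```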

Tools needed for the SEO audit

- Google PageSpeed Insights

- Google’s Structured Data Testing Tool

- Google Analytics

- Google Search Console

- Ubersuggest

- Copyscape

- Screaming Frog

- Ahrefs

- Panguin Tool

- Website Penalty Indicator

- Structured Data Markup Helper

Actions you must take after an SEO audit

Although conducting an SEO audit may feel tiring, never become complacent afterwards. You still need to stay proactive to ensure that revenue and ROI continue to grow. That means understanding and improving your organic search visibility, learning from (and honing) your previous SEO methods, and testing your on-page, off-page and technical SEO in detail, including keyword comparisons with rivals. By investing time and money wisely, you’ll boost rankings, traffic and conversion rates. The following activities will help to sustain your efforts:

Develop a list of insights

A comprehensive and customized report will include an inventory of audited items and how each one stands when gauged against standard operating procedures (SOPs), your audience and your competitors. It should also include suggestions to improve or resolve each item, summarised so that they are easy to absorb. Lightweight or automated audit processes can lead to vague or superficial results. Always aim for a list of specific insights, whether concise or detailed, that flags the items requiring attention.

Prioritise based on level of impact

Using the insights you gathered above, you can now plan accordingly. If someone else undertook the audit, review their findings and gauge the anticipated impact of each item on your site overall.

Bear in mind that remedial actions will vary in how much effect they have. Although it can be difficult to commit to specific figures, you should still be able to rank the items that need attention objectively, according to the scale of each issue. Setting realistic targets, based on both accepted standards and your own aspirations, will help you gauge the real effect. For instance, filling in missing title and meta description tags on every page with tailored, helpful and keyword-focused text will be more effective than applying structured data to a contact us page, so it should be given greater priority. Makes sense?

Determine necessary resources

Once you have done the above, you can work out the time, budget and tools required. Some revisions are straightforward and can be undertaken by anyone, whilst others need help from external resources. For example, applying an advanced canonical tag strategy may call for sound technical SEO knowledge and web development skills. Such expertise can be pricey and needs to be arranged in good time. Once you know how long each task will take, how much it’ll cost and how effective it is likely to be, you can reorder your list accordingly.
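One way to reorder the list is to score each item by its estimated impact per unit of cost. The Python sketch below is only an illustration under stated assumptions: the 1 to 5 impact scale, the `hourly_rate` default and every figure in the sample data are made up for the example, and you should substitute your own estimates.

```python
from dataclasses import dataclass


@dataclass
class AuditItem:
    name: str
    impact: int    # estimated impact, 1 (low) to 5 (high); a judgment call
    hours: float   # estimated time to complete
    cost: float    # estimated external spend, in your currency


def prioritise(items, hourly_rate=50.0):
    """Order items by estimated impact per unit of total cost, best first."""

    def score(item):
        total_cost = item.hours * hourly_rate + item.cost
        # A free, instant fix always comes first.
        return item.impact / total_cost if total_cost else float("inf")

    return sorted(items, key=score, reverse=True)


items = [
    AuditItem("Advanced canonical tag strategy", impact=4, hours=10, cost=1500),
    AuditItem("Structured data for contact page", impact=1, hours=2, cost=0),
    AuditItem("Fix missing titles and meta descriptions", impact=5, hours=8, cost=0),
]
for item in prioritise(items):
    print(item.name)
# Fix missing titles and meta descriptions
# Structured data for contact page
# Advanced canonical tag strategy
```

A simple ratio like this is a starting point for discussion rather than a verdict; an expensive task may still need to go first if other work depends on it.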

Develop a timeline

Be methodical in your approach and plan ahead by breaking the work into regular, manageable tasks. By now you should have assessed every action item. Given your budget, work rate and availability, you can estimate roughly how long all the tasks will take to complete. You can then set out a timeline with specific checkpoints and reviews to gauge the effectiveness of your efforts.
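As a rough illustration of the arithmetic, this Python sketch turns task estimates and a weekly capacity into checkpoint dates. It assumes tasks are worked one after another; `build_timeline`, the task names and the hour figures are all hypothetical.

```python
import math
from datetime import date, timedelta


def build_timeline(tasks, start, hours_per_week):
    """Schedule tasks back to back and return (name, due_date) checkpoints.

    tasks is a list of (name, estimated_hours) in priority order.
    Each due date is rounded up to the end of a whole week.
    """
    timeline = []
    elapsed_hours = 0.0
    for name, hours in tasks:
        elapsed_hours += hours
        weeks = math.ceil(elapsed_hours / hours_per_week)
        timeline.append((name, start + timedelta(weeks=weeks)))
    return timeline


tasks = [
    ("Fix titles and meta descriptions", 8),
    ("Repair broken links", 6),
    ("Canonical tag strategy", 10),
]
for name, due in build_timeline(tasks, date(2024, 1, 1), hours_per_week=10):
    print(due.isoformat(), name)
# 2024-01-08 Fix titles and meta descriptions
# 2024-01-15 Repair broken links
# 2024-01-22 Canonical tag strategy
```

Each due date doubles as a natural review point for checking whether the completed work is having the effect you expected.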

Create an action plan

Putting a plan into action requires the right infrastructure for teamwork, task delegation, scheduling and ownership. Whether you use a simple task list, SEO tools or a project management solution, the best way forward is to take the audit seriously and give it due importance. Piling up multiple responsibilities without such a system is risky and should be avoided. Instead, plan ahead with realistic expectations so that the work is organised and finished within your budget and timeline.

Remember that when the work is split into segments, not every contributor will see the importance of the whole. Therefore always communicate with everyone to highlight how imperative each task truly is and give it the importance it deserves!

Achieve success

Have you considered what constitutes success and how you’ll measure it? Reviewing your earlier targets will help to focus your efforts. Use benchmark stats, check them against your schedule and track average progress in impressions, traffic and conversions throughout. Job done!

Keep monitoring your improvements against this plan. By using the annotation feature in Google Analytics and running frequent reports, you’ll be home and dry. This way you can adapt to any changing circumstances and clearly visualise your progress over time.

Final thoughts on SEO audit

SEO is a potent digital marketing channel, and there are various types of audits and associated tools to help you with it. The better you understand each analysis, the better your performance will be, because you’ll be able to implement the resulting improvements. Ultimately, this can boost your visibility and, consequently, your traffic and rankings.